Choosing the right benchmarking method depends on your campaign size, goals, and resources. Here's a quick breakdown of the three main approaches:

- Manual Benchmarking: Best for small campaigns or one-time reviews. It’s labor-intensive but provides full control over the process.

- Automated Platforms: Ideal for managing multiple campaigns at scale. These tools save time and offer consistent results but come with subscription fees.

- Simulation-Based Benchmarking: Perfect for testing strategies in a controlled environment without financial risks. It requires upfront investment and technical expertise.

Quick Comparison

| Technique | Best For | Key Advantage | Main Limitation |
|---|---|---|---|
| Manual Benchmarking | Small-scale or one-time evaluations | Full control over data | Time-consuming and hard to scale |
| Automated Platforms | Large-scale campaigns, ongoing tracking | Fast, real-time analysis | Subscription costs and learning curve |
| Simulation-Based | High-stakes decisions, scenario testing | No financial risk during testing | High initial setup costs |

Each method has its strengths and weaknesses. Smaller businesses might start with manual benchmarking for control and cost savings, while larger organizations benefit from automation and simulations for efficiency and risk-free testing.

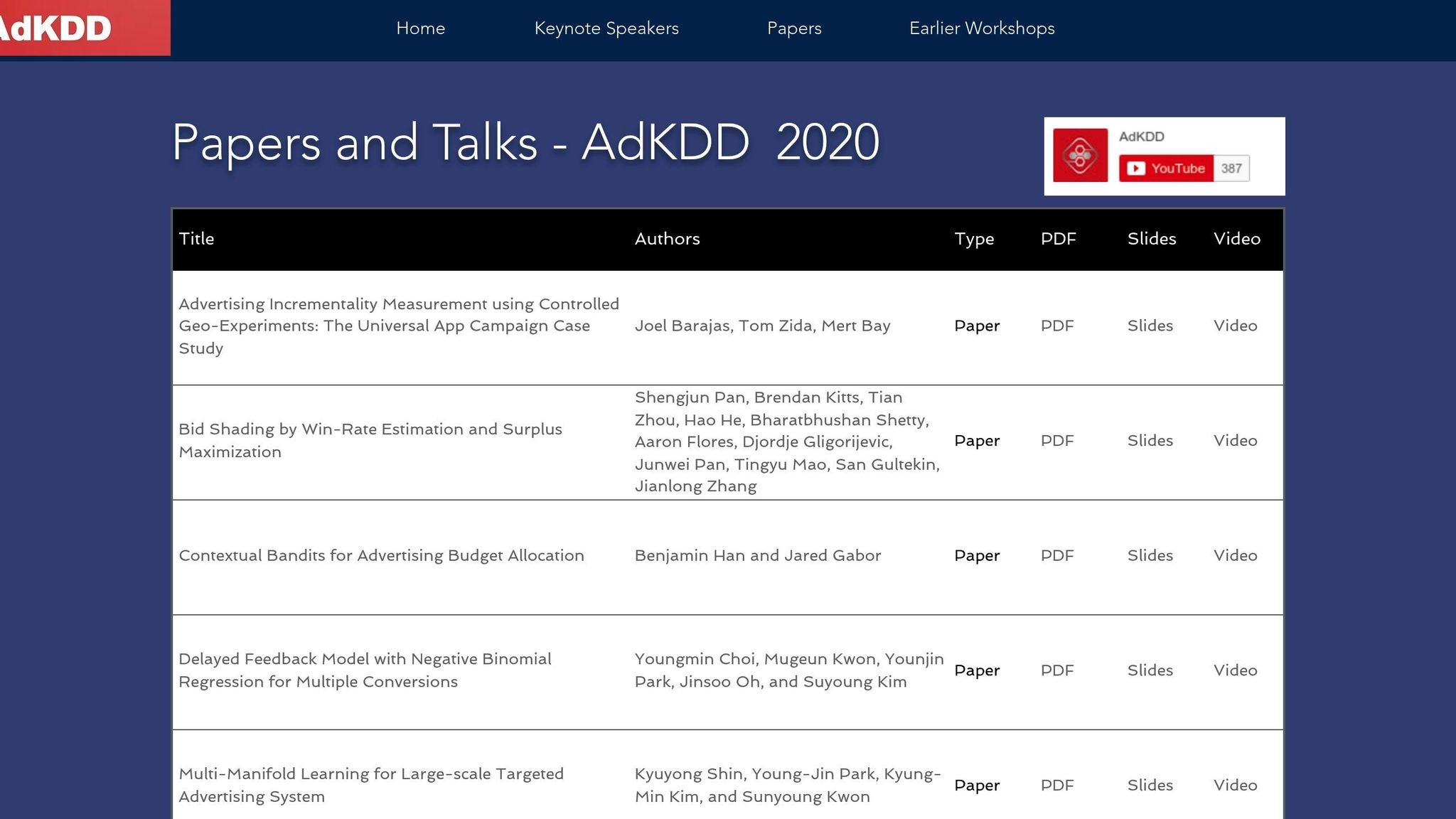

AdKDD 2020: Bid Shading by Win-Rate Estimation and Surplus Maximization

1. Manual Benchmarking

Manual benchmarking involves analyzing bid algorithms by directly working with raw data. This process typically includes downloading campaign data, creating custom spreadsheets, and comparing different bidding strategies or performance across specific time periods.
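
As a rough illustration of that workflow, the sketch below aggregates an exported report by bidding strategy and derives cost per click, cost per acquisition, and return on ad spend. The column names, strategy labels, and figures are hypothetical stand-ins for a real export, not the format of any particular ad platform.

```python
import csv
import io
from collections import defaultdict

# Hypothetical export: column names and numbers are invented for illustration.
EXPORT = """strategy,cost,clicks,conversions,revenue
manual_cpc,500.00,1000,25,1500.00
manual_cpc,450.00,900,20,1200.00
target_cpa,600.00,1100,40,2400.00
target_cpa,550.00,1000,35,2100.00
"""

def summarize(csv_text):
    """Sum the raw columns per strategy, then derive CPC, CPA, and ROAS."""
    totals = defaultdict(
        lambda: {"cost": 0.0, "clicks": 0, "conversions": 0, "revenue": 0.0}
    )
    for row in csv.DictReader(io.StringIO(csv_text)):
        t = totals[row["strategy"]]
        t["cost"] += float(row["cost"])
        t["clicks"] += int(row["clicks"])
        t["conversions"] += int(row["conversions"])
        t["revenue"] += float(row["revenue"])

    summary = {}
    for name, t in totals.items():
        summary[name] = {
            # Guard against division by zero for strategies with no activity.
            "cpc": t["cost"] / t["clicks"] if t["clicks"] else None,
            "cpa": t["cost"] / t["conversions"] if t["conversions"] else None,
            "roas": t["revenue"] / t["cost"] if t["cost"] else None,
        }
    return summary

for strategy, metrics in summarize(EXPORT).items():
    print(strategy, metrics)
```

Ratios are computed from the summed totals rather than by averaging per-row ratios, so a low-volume day does not carry the same weight as a high-volume one.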

Efficiency

Manual benchmarking is a time-consuming process. Analysts often spend hours gathering and organizing data from platforms like Google Ads or Microsoft Advertising. While it works for small-scale evaluations, it becomes a labor-intensive task when dealing with multiple algorithms or campaigns spanning various timeframes.

Scalability

This method doesn’t scale well. An individual analyst can manage a handful of campaigns, but as the number of campaigns grows, manual benchmarking quickly hits a limit. For larger PPC operations, this approach can lead to delays and inefficiencies.

Transparency

One of the strengths of manual benchmarking is the clarity it offers. Analysts can examine every data point and clearly document their methodology. This level of detail is particularly useful when presenting findings to executives or clients, as it allows for a full breakdown of the analysis and the reasoning behind any recommendations.

Resource Requirements

Manual benchmarking demands skilled analysts who are proficient with analytics tools and spreadsheets. There are no software fees, but analyst time is the main cost: the learning curve can be steep, and the hours required grow with every campaign added to the review.

Use Cases

This approach is ideal for specific scenarios, such as in-depth evaluations, small agencies managing limited accounts, one-time strategic reviews, or educational purposes where understanding PPC metrics is the primary goal. Up next, we’ll explore how automated benchmarking tools can simplify these tasks.

2. Automated Benchmarking Platforms

Automated benchmarking platforms simplify the process of evaluating bid algorithms by directly connecting to advertising accounts, pulling in data, comparing performance, and generating reports - all without manual effort. This approach overcomes many of the challenges associated with traditional, manual benchmarking methods.

Efficiency

These platforms dramatically reduce the time needed for benchmarking. Tasks that might take days to complete manually are done in just minutes. They also provide continuous monitoring, real-time insights, and simultaneous analysis across multiple campaigns. This allows analysts to spend their time interpreting data and making strategic decisions, rather than being bogged down by data collection.

Scalability

Automated systems are built to handle operations at scale. They can monitor hundreds of campaigns across various platforms at once, making them essential tools for large-scale PPC efforts.

Beyond managing data volume, these platforms also bring consistency to benchmarking. By standardizing evaluation processes across teams and accounts, they ensure that performance metrics are assessed uniformly - something especially valuable for agencies juggling multiple client portfolios.

Transparency

Transparency can be a mixed bag with automated platforms. While they provide detailed dashboards and in-depth reports, the algorithms and methods used for calculations are often proprietary. This means users can see the results but may not fully understand how they were derived.

That said, many platforms address this by offering drill-down features that let users explore the underlying data behind each metric. Some advanced tools even include audit trails, showing exactly which data points were used and when the analysis was conducted.
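
To make the audit-trail idea concrete, here is a minimal sketch of what such a record could contain: the metric, the number of input rows, a fingerprint of those rows, and a timestamp. The function name `audit_record` and the record fields are assumptions for illustration and do not describe how any specific platform stores its audit data.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(metric_name, value, rows):
    """Tie a metric value to the exact input rows and the time it was computed."""
    # Serialize with sorted keys so identical rows always hash identically.
    canonical = json.dumps(rows, sort_keys=True).encode("utf-8")
    return {
        "metric": metric_name,
        "value": value,
        "row_count": len(rows),
        "input_sha256": hashlib.sha256(canonical).hexdigest(),
        "computed_at": datetime.now(timezone.utc).isoformat(),
    }

# Hypothetical input rows.
rows = [
    {"campaign": "A", "cost": 100.0, "conversions": 4},
    {"campaign": "B", "cost": 150.0, "conversions": 6},
]
cpa = sum(r["cost"] for r in rows) / sum(r["conversions"] for r in rows)
print(audit_record("cpa", cpa, rows))
```

If the underlying data changes later, the fingerprint changes with it, which makes it easy to tell whether two reports were built from the same inputs.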

Resource Requirements

Enterprise-level automated platforms can come with a hefty price tag, whether billed monthly or annually. However, the costs are often offset by reduced labor expenses and faster, more informed decision-making. Their interfaces also demand less technical expertise than building analyses by hand, although teams should still budget time for onboarding and data integration. Once that is done, analysts can focus more on strategy than on number-crunching.

Use Cases

These platforms are ideal for organizations that require ongoing performance monitoring. Agencies managing multiple clients and enterprises with significant ad budgets benefit the most. They’re especially effective for competitive analysis, offering consistent tracking and deeper insights. For users of tools like the Top PPC Marketing Directory, these platforms provide a major upgrade in efficiency and analytical capability compared to manual methods.


3. Simulation-Based Benchmarking

Simulation-based benchmarking allows advertisers to test bidding algorithms in a controlled virtual environment, removing the financial risks tied to real-world experimentation. By using historical data and mathematical models, this method predicts how various bidding strategies might perform under different market conditions.
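
A minimal sketch of the idea is to replay a log of past auctions under each candidate strategy and compare the outcomes. Everything here is an assumption made for illustration: the log is synthetic rather than real historical data, each auction is reduced to a highest competing bid and an estimated value, and the winner pays the competing bid (a second-price style rule). Real ad auctions are more involved.

```python
import random

def make_auction_log(n, seed=0):
    """Build a synthetic stand-in for historical auction data (illustrative only)."""
    rng = random.Random(seed)
    return [
        {"competing_bid": rng.uniform(0.2, 2.0), "value": rng.uniform(0.5, 3.0)}
        for _ in range(n)
    ]

def replay(strategy, auctions):
    """Replay logged auctions under one strategy and total the results."""
    spend = 0.0
    value_won = 0.0
    wins = 0
    for auction in auctions:
        bid = strategy(auction["value"])
        if bid > auction["competing_bid"]:
            wins += 1
            # Assumption: the winner pays the highest competing bid.
            spend += auction["competing_bid"]
            value_won += auction["value"]
    return {
        "wins": wins,
        "spend": spend,
        "value": value_won,
        "surplus": value_won - spend,
    }

def flat_bid(value):
    """Bid the same amount in every auction."""
    return 1.0

def half_value_bid(value):
    """Bid half of the estimated value of the auction."""
    return 0.5 * value

log = make_auction_log(10_000, seed=42)
for name, strategy in [("flat", flat_bid), ("half_value", half_value_bid)]:
    print(name, replay(strategy, log))
```

Because both strategies are replayed against the same log, differences in the output come from the strategies themselves rather than from changes in market conditions between tests.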

Efficiency

One of the biggest advantages of simulation-based benchmarking is its speed. Teams can test hundreds of scenarios in just a few hours, compared to the weeks it might take to gather actionable data from live campaigns. This makes it possible to explore factors like seasonal trends and competitor behavior without waiting for real-world results.

However, the initial setup can be time-intensive. Building accurate simulations requires in-depth historical data analysis and careful calibration of algorithms to reflect realistic conditions.

Scalability

These simulations are highly adaptable, capable of testing multiple variables across different market segments. For example, a single simulation can evaluate bid performance across industries, geographic regions, and budget levels - all without the resource limitations of live testing. Because simulations run on historical data rather than live traffic, the same test can also be repeated across many campaigns.

That said, running complex simulations at scale can demand significant computational power. For instance, analyzing thousands of keywords across numerous campaigns may stretch resources, potentially limiting how many tests can be conducted simultaneously.

Transparency

Simulation-based benchmarking offers clear visibility into testing conditions and variables. Unlike live campaigns, which can be influenced by unpredictable external factors, simulations document every input and assumption. This level of clarity ensures that teams know exactly how results were derived.

However, there’s a catch. While the simulations themselves are transparent, their ability to fully replicate real-world conditions may be limited. Market assumptions used in simulations might not perfectly align with actual market dynamics, introducing a layer of uncertainty.

Resource Requirements

Creating effective simulations requires a combination of specialized knowledge and upfront data investment. Skilled data scientists with expertise in statistical modeling and PPC (pay-per-click) dynamics are essential. Additionally, these models need regular updates to stay relevant as market conditions evolve.

Despite the high initial costs, simulations become more economical over time. Once the system is established, running additional tests costs little compared to live campaign testing, where each experiment requires actual ad spend.
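
One way to reason about that trade-off is a simple break-even estimate. The symbols below are assumptions introduced for this sketch: $F$ is the one-time cost of building the simulator, $c_s$ the cost of one simulated test, $c_l$ the cost of one live test, and $n$ the number of tests. The figures in the second line are illustrative only and are not drawn from any real campaign.

```latex
% Simulation is cheaper overall once its total cost falls below live testing.
\[
F + n\,c_s < n\,c_l
\quad\Longleftrightarrow\quad
n > \frac{F}{c_l - c_s}, \qquad c_l > c_s .
\]
% Illustrative numbers only:
\[
F = 50{,}000,\quad c_s = 50,\quad c_l = 2{,}050
\quad\Longrightarrow\quad
n > \frac{50{,}000}{2{,}000} = 25 .
\]
```

Under these assumed figures, the simulator pays for itself after the 25th test. A smaller gap between live and simulated test costs pushes the break-even point further out.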

Use Cases

Simulation-based benchmarking is especially useful for high-stakes decisions, such as major changes to bidding algorithms. For enterprise advertisers managing million-dollar budgets, simulations provide a safe space to test strategies without risking significant ad spend.

This method is also ideal for seasonal planning. For instance, retailers can use data from previous years to simulate how different bidding strategies might perform during key shopping periods like Black Friday or the holiday season. Platforms like the Top PPC Marketing Directory can further enhance these efforts by offering resources to refine advanced bidding strategies in a risk-free environment.

Advantages and Disadvantages

Every benchmarking method comes with its own set of strengths and challenges. Knowing these trade-offs can help advertisers decide which approach best suits their campaign goals and available resources.

| Technique | Advantages | Disadvantages |
|---|---|---|
| Manual Benchmarking | • Full control over testing variables • Detailed understanding of campaign specifics • No software costs • Flexible timelines • Direct access to performance data | • Extremely time-consuming • Higher risk of human error • Hard to scale across multiple campaigns • Requires strong analytical skills • Inconsistent methods can creep in |
| Automated Benchmarking Platforms | • Fast processing of large data sets • Consistent testing methods • Real-time monitoring • Advanced statistical tools • Reduces manual effort | • Subscription fees can add up • Learning curve to use platforms effectively • Limited customization options • Reliance on third-party systems • Data integration can be tricky |
| Simulation-Based Benchmarking | • No financial risk during tests • Quick evaluation of multiple scenarios • Uses historical data for analysis • Predictive modeling tools • Safe space for testing strategies | • High initial development costs • Requires data science expertise • May not fully reflect real-world conditions • Needs significant computational power • Regular updates to models are necessary |

Each method has its place, depending on the scale and goals of your campaign. Manual benchmarking gives you complete control but demands time and expertise. Automated platforms streamline processes and deliver consistent results but come with subscription costs and a learning curve. Simulation-based benchmarking minimizes financial risks and allows for scenario testing but requires a hefty upfront investment and technical skills.

For businesses using resources like the Top PPC Marketing Directory, combining these approaches can lead to better insights. Smaller businesses might start with manual methods to build a solid foundation and then transition to automated platforms as their campaigns grow. Larger enterprises could use simulations for strategic planning while relying on automated tools for ongoing optimization. By mixing and matching these methods, advertisers can make benchmarking a key part of their PPC strategy.

Conclusion

When deciding on a benchmarking method, consider the size of your campaign, available resources, and technical know-how. For smaller campaigns with limited budgets, manual benchmarking works well, offering control without extra software costs. On the other hand, automated platforms are better suited for managing multiple campaigns, delivering consistent and real-time results. For larger enterprises, simulation-based benchmarking is ideal - it allows for testing strategies in a risk-free environment but requires a significant upfront investment and specialized expertise.

A blended approach often works best. Start with manual benchmarking for smaller-scale efforts, transition to automated platforms as you grow, and incorporate simulations when planning long-term strategies.

Budget plays a big role in your choice. Manual methods demand time but save on software fees. Automated platforms come with recurring subscription costs, while simulations require higher initial investments and skilled professionals to operate effectively.

Selecting the right tools can also simplify your benchmarking process. With so many performance marketing tools available, narrowing down your options can feel overwhelming. This is where resources like the Top PPC Marketing Directory come in handy. This platform offers a curated list of bid management and performance tracking tools, helping you compare options and find the best fit for your benchmarking needs.

FAQs

How can I choose the best benchmarking technique for my campaign goals?

To pick the best benchmarking method for your campaign, start by pinpointing your main objectives - whether that's boosting ROI, driving more conversions, or cutting down cost-per-acquisition. Once you've nailed down your goals, think about the size and complexity of your campaign.

For instance, manual benchmarking gives you greater control but demands a lot of time and effort. Automated platforms bring consistency and scale but offer less visibility into how their results are calculated. Meanwhile, simulation-based benchmarking lets you test scenarios without ad spend, though it requires upfront investment and its results may not fully match live conditions.

The key is to select a method that matches your budget, your preferred level of control, and your performance goals, ensuring it aligns with the overall success of your campaign.

What challenges can arise with automated benchmarking for bid algorithms, and how can they be addressed?

Automated benchmarking platforms for bid algorithms often face hurdles like restricted access to diverse, high-quality datasets and uneven evaluation standards. These limitations can complicate the process of making fair and accurate comparisons of algorithm performance.

To overcome these obstacles, businesses and researchers should prioritize building shared, well-rounded datasets, implementing consistent benchmarking practices, and encouraging collaboration across the industry. These efforts can greatly enhance the accuracy and usefulness of benchmarking initiatives.

Can simulation-based benchmarking accurately reflect real-world market conditions, and how should I interpret the results?

Simulation-based benchmarking offers helpful insights but falls short of capturing the complexity of real-world market dynamics. Variables like unexpected user behavior, shifting market trends, and competitor strategies are challenging to simulate with precision.

When reviewing the results, pay close attention to the performance metrics used in the simulation, as they play a key role in shaping the outcomes. Remember, algorithms might respond differently in live settings compared to simulations. Use these results as a directional tool, but always back them up with real-world testing to ensure your bid optimization strategies hold up under actual conditions.